A 3-Week Series

{{< admonition type="tip" >}} This article was first published as part of a Substack experiment; I have reproduced it here. {{< /admonition >}}

Hey everyone,

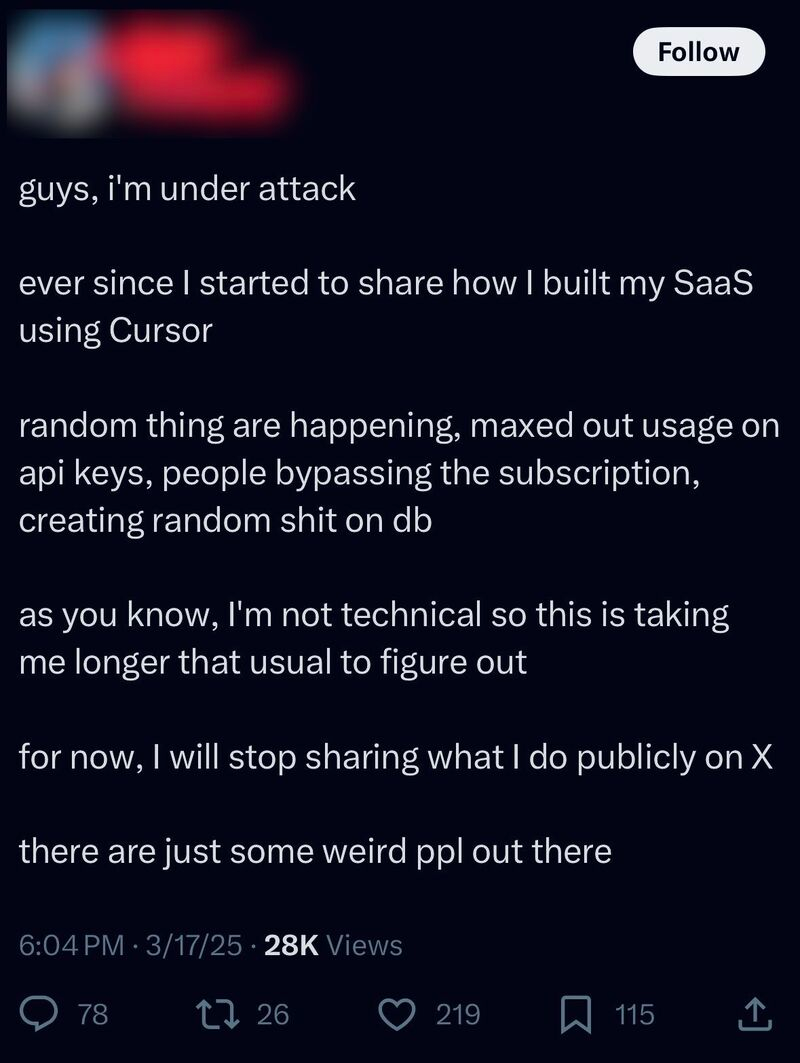

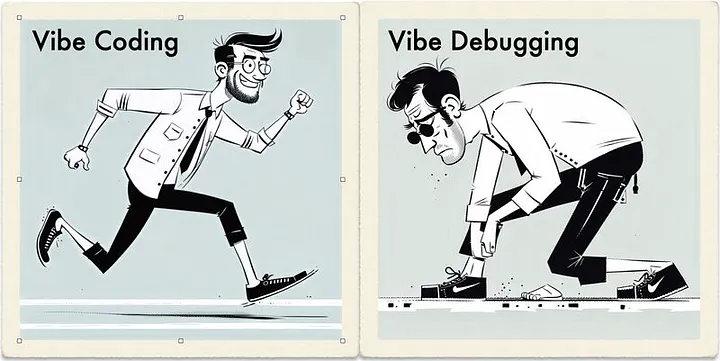

Let's be honest. This new wave of generative AI is moving incredibly fast. One minute we're asking it to write a poem, and the next, AI “agents” are being built to act on their own.

I've been working in tech for a long time, but some of the security risks I'm seeing are… different. They're strange, new, and frankly, a little scary.

Your old cybersecurity playbook? It’s not going to cut it. Trying to use old security methods on these new AIs is like trying to put a bike lock on a cloud. The problems are just in a different dimension.

What is not from another dimension? Receiving this entire 12-post series in your mailbox…

So, I decided to put together a guide. For the next three weeks, I'm going to walk you through the security risks of this new AI world. I'll look at the real threats and, more importantly, how to deal with them. Every Monday, Wednesday, Friday, and Saturday, a bite-sized newsletter will get you up to speed on a single topic: a quick read, with plenty to discuss at the coffee machine (or in Slack if you're working from home)!

Here’s what you can expect in the coming 12(!) posts:

- Week 1: Getting a Handle on the Basics. Why is securing an AI so different from a regular app? We'll jump right into the most common weak spots, like tricking an AI into doing something it shouldn't (Prompt Injection) or making it spill secrets it's supposed to keep. Then, we’ll talk about AI agents: what happens when AI starts doing things on its own?

- Week 2: When Things Get Weird. This is where it gets really interesting. We’ll look at what happens when AIs team up and their problems multiply. We'll cover AI Hallucinations (what happens when an AI just makes stuff up) and how that can cause a total mess. We'll also dig into scary stuff like an AI's goals being hijacked by a bad actor.

- Week 3: Building a Defense That Actually Works. It's not all doom and gloom! We’ll spend this week focused on solutions. I'll show you how to protect your data when working with AI and what “Red Teaming” an AI looks like. (Hint: It’s about trying to break your own stuff to find the flaws first.) We'll also look at some cool new tools and frameworks designed to keep AI systems safe.

This series is for you if you're a developer, a security pro, or just curious about what's really going on under the hood of AI.

If you’ve been looking for a straightforward guide to the real security challenges of AI, this is it.

The first post is coming this Monday. If you know anyone who should be part of this conversation, now would be a great time to share this with them.